Insight Communities

Build a more customer-centric business with our simple, integrated insight community platform.

What is an Insight Community?

Work Quickly

An insight community is an online research ecosystem that lets brands gather fast, continual feedback from their target audience. Our insight community platform enables companies to make smarter, data-driven, customer-centric decisions with integrated qualitative and quantitative consumer feedback tools. As the fastest-growing market research methodology, online community research brings brands and consumers into a collaborative partnership where organizational strategy and direction are always supported by market insights.

Ideation Sessions

Crowdsource ideas with online community research. Commenting, rating, and voting help you prioritize ideas and drive on-target innovation.

Focus Groups

Interactive discussions inside an insight community capture in-depth customer insights. Collect text, images, or video on the soccer field, in a store, at home – anywhere.

Surveys

Get answers fast. Deploy powerful, engaging consumer feedback surveys across any device in minutes. View and securely share real-time results.

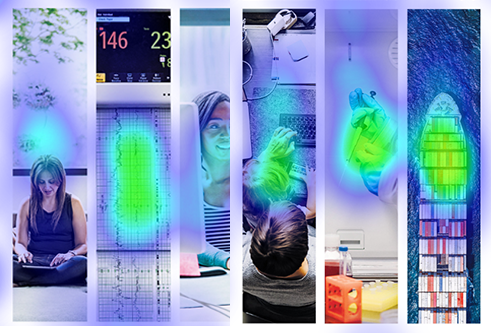

Heat Maps

Test ad campaigns, print, copy, and other concepts using our heat mapping tool. Members can drop “markers” with comments on your copy, giving you visual insight into your campaign and design initiatives.

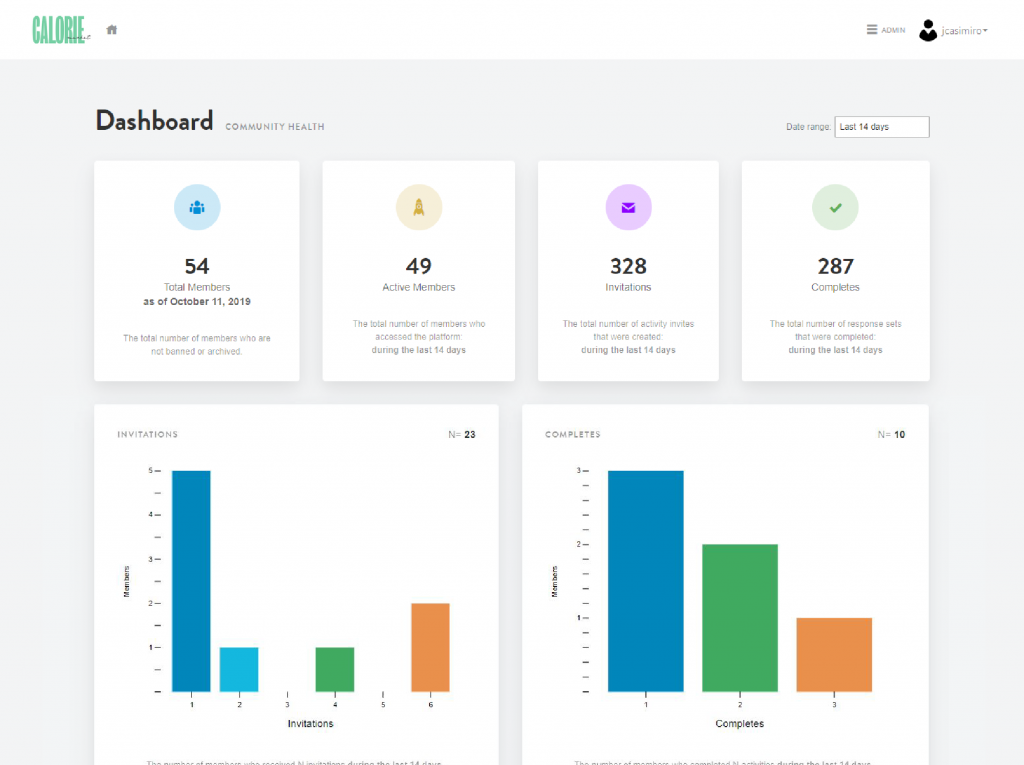

Community Dashboard

We can help keep your community healthy and engaged by monitoring member responsiveness, ensuring the best overall member experience.

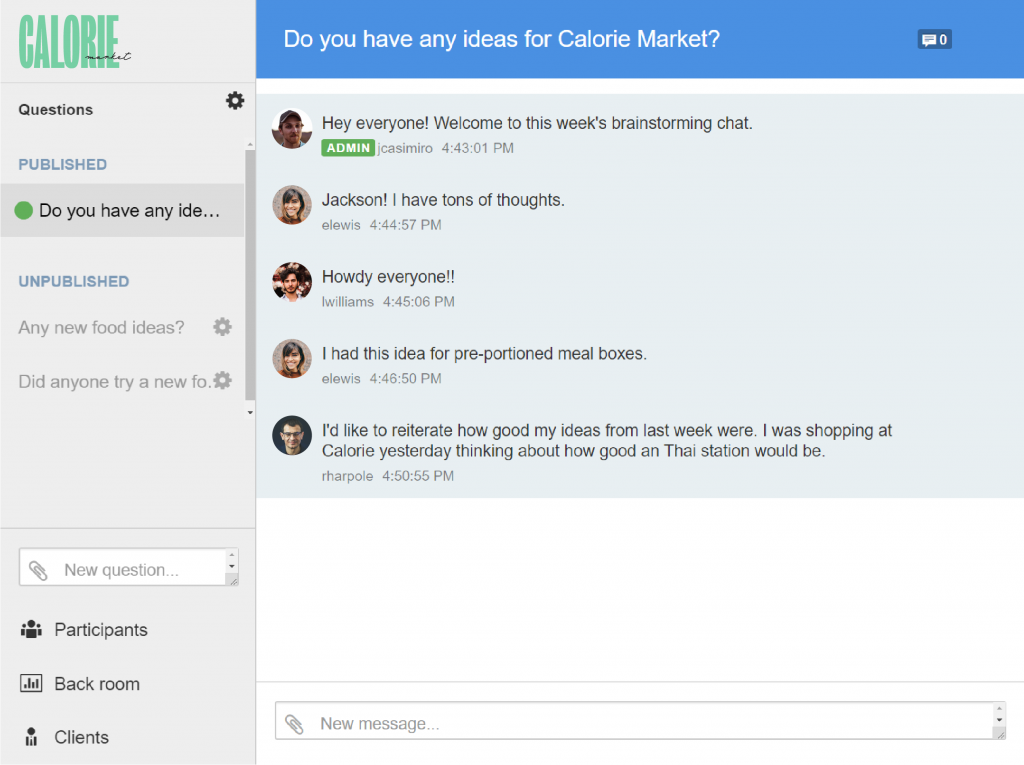

Live Chat

Use our live chat tool to run a synchronous focus group. Probe and ask follow-up questions to dive deeper, walking away with richer instant insights.

Project Tiers

D.I.Y.

Managed Community

Full Service

Drag and Drop Customization

Brand your community with your logo, colors, and background images. No coding needed.

Mobile First

Collect product and consumer feedback across any device or operating system — phone, tablet, or desktop — with our responsive platform.

Built-In Incentives

Integrated access to a diverse offering of online gift cards. Grant members redeemable points at the click of a button.

Launch Support

We set up and launch a customized online research community for you, with full branding, team training, and content creation.

Recruitment

We’ll draft your screener, create user profile surveys, and help you leverage databases, social media, and website traffic to ensure you get the right people, right away.

Engagement

We provide you with monthly health reports, activity planning, research roadmaps, member support, and ongoing community optimization.

Project Management

We work with you to understand your business objectives and create a research roadmap for your company’s unique needs. Working together, we will gather the right insights in the right timeframe.

Survey and Guide Development

Offload the burden of project setup and design to our full-service research team. We can design an activity instrument that addresses your mission-critical questions and helps you get the most from your insights and strategy.

Fieldwork and Moderation

Rely on our dedicated research team to field and moderate your study. From launch to close and everything in between, we’ve got you covered.

Reporting and Presentation

We deliver in-depth analysis, voice-of-customer highlights, and boardroom-ready presentations to share with your stakeholders.

Additional Services

Bring Your Data to Life

Partner with our experienced researchers to gain helpful context and actionable insights.
